Build your AI agent

Train with your docs

Upload PDF, TXT, Markdown, JSON, and web pages.

Interact with the world

Easily connect your agent to external APIs and apps.

Choose your models

Use commercial or open LLMs and embedders.

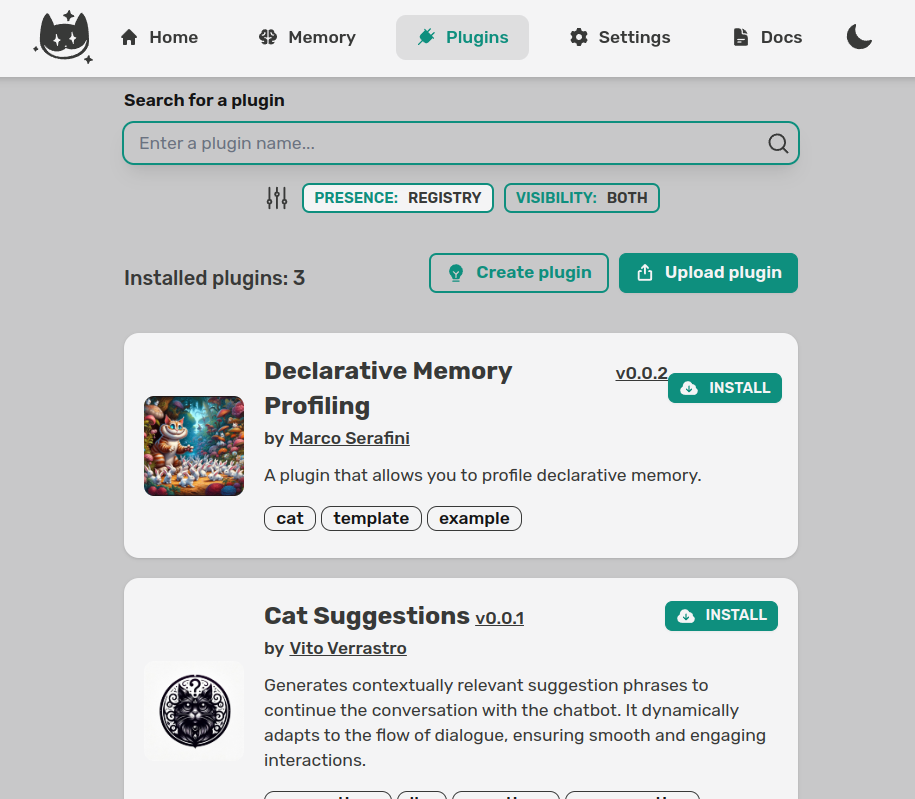

Plug & Play

100% dockerized, with live reload.

Easy to Extend

Install plugins from the community registry in one click, or write your own.

Smart dialogues

Cutting-edge conversational skills with hooks, tools (function calling), and forms.

Developer experience

```yaml
services:
  cheshire-cat-core:
    image: ghcr.io/cheshire-cat-ai/core:latest
    container_name: cheshire_cat_core
    ports:
      - 1865:80
    volumes:
      - ./static:/app/cat/static
      - ./plugins:/app/cat/plugins
      - ./data:/app/cat/data
```

Docker based

The Cat runs as a single container, so it integrates easily into your architecture alongside:

- Reverse proxy (Caddy, Nginx)

- Vector DB (Qdrant)

- LLM runner (Ollama, vLLM)

- Application (Django, WordPress)

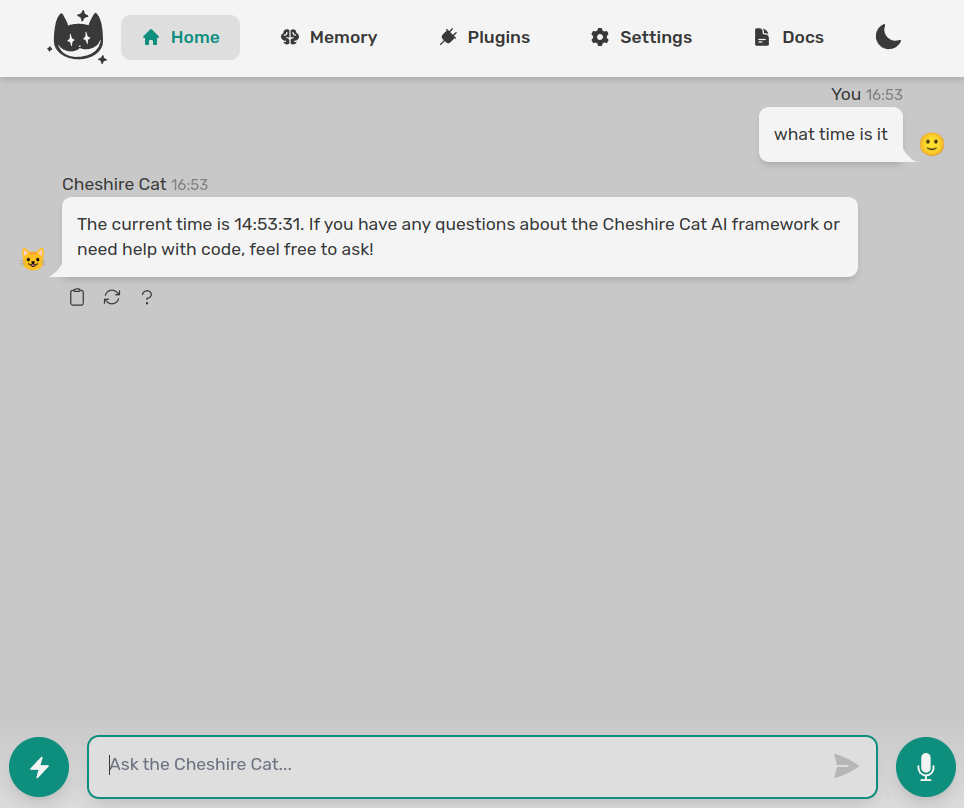

Admin panel

Manage your installation with the admin panel, available at first launch.

- Chat with your agent, with live reload on every code change

- Install and activate/deactivate plugins

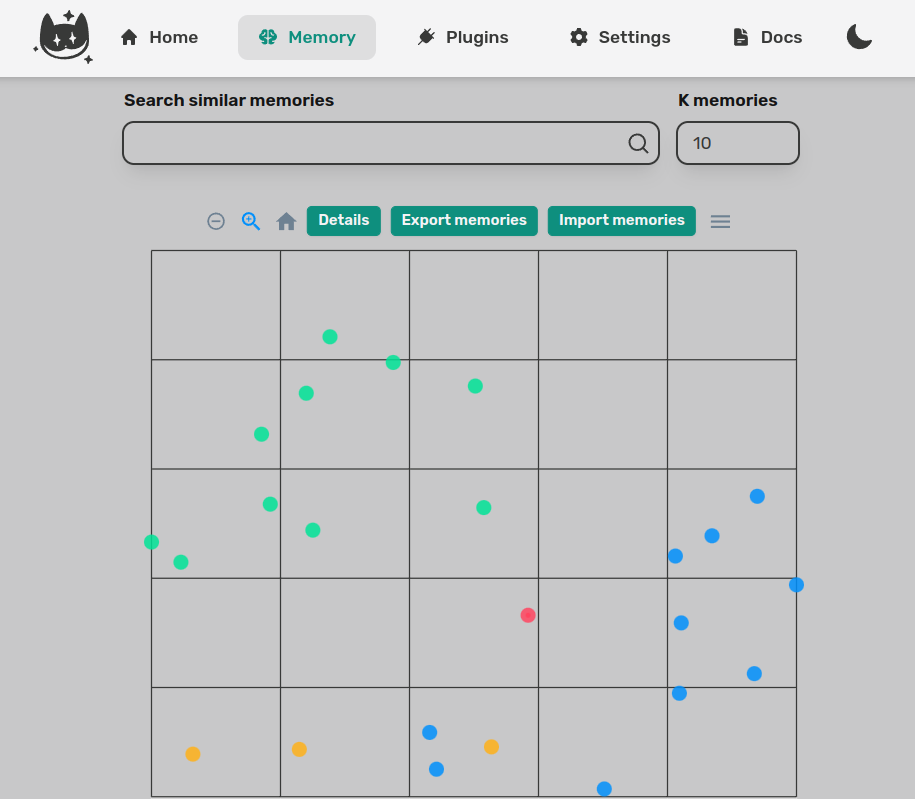

- Visualize and manage memory contents

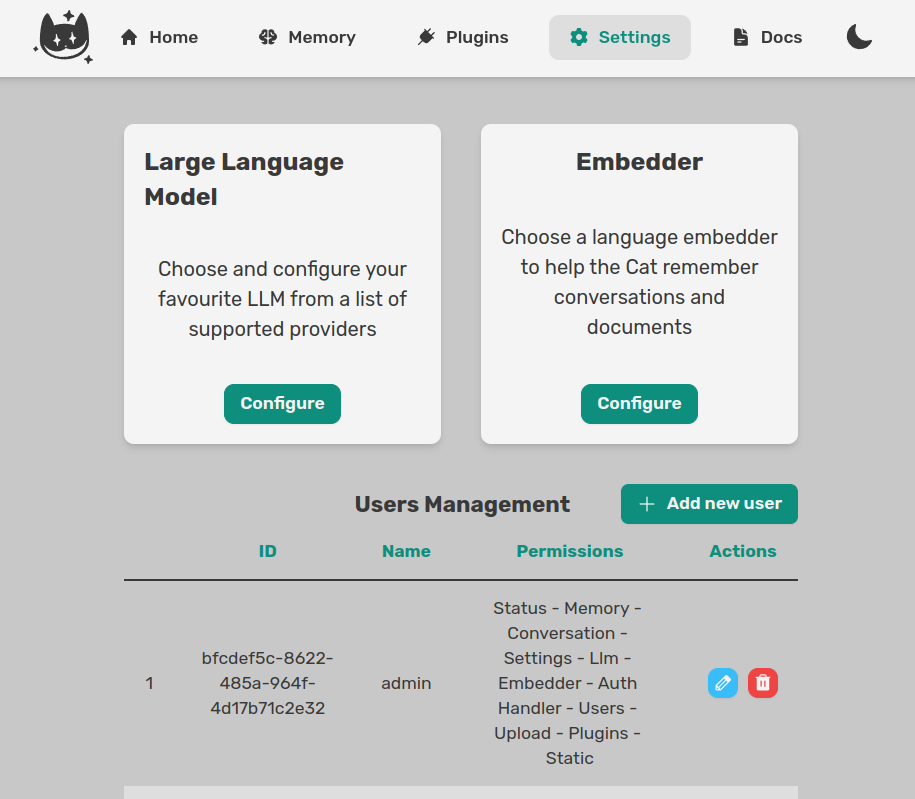

- Configure LLM and embedder

- Manage your users

```javascript
// Meanwhile, in the browser...
import { CatClient } from 'ccat-api'

const cat = new CatClient({
    baseUrl: 'localhost',
    userId: 'user',
    //... other settings
})

cat.send('Hello kitten!')
```

Extensive HTTP and WebSocket API

Our framework is microservice-first, which makes it a good fit when you want to add a conversational layer to existing software.

- Endpoints for LLM, embedder, vector memory, uploads, settings, users

- Websocket chat with token streaming and notifications

- Community-built clients in the most widely used languages

- You can add endpoints via plugin
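As a rough illustration of the websocket chat with token streaming and notifications, the sketch below processes incoming frames with the standard library only. The frame shapes are assumptions, not taken from this page: a user message is assumed to be `{"text": ...}`, and server frames are assumed to carry a `type` (`chat_token`, `chat`, `notification`) and a `content` field. Check the API reference for your Cat version before relying on them. Opening the actual websocket connection is left out, since it needs a client library.

```python
import json
from dataclasses import dataclass, field

# Assumed frame types; verify them against your Cat version.
TOKEN, CHAT, NOTIFICATION = "chat_token", "chat", "notification"


@dataclass
class ChatState:
    partial: str = ""                            # tokens streamed so far
    replies: list = field(default_factory=list)  # completed answers
    notices: list = field(default_factory=list)  # notifications


def encode_message(text: str) -> str:
    """Serialize a user message (assumed shape: {"text": ...})."""
    return json.dumps({"text": text})


def handle_frame(raw: str, state: ChatState) -> ChatState:
    """Update the chat state from one JSON frame received on the socket."""
    frame = json.loads(raw)
    kind = frame.get("type")
    content = frame.get("content", "")
    if kind == TOKEN:
        state.partial += content       # show the answer while it streams
    elif kind == CHAT:
        state.replies.append(content)  # final answer replaces the partial one
        state.partial = ""
    elif kind == NOTIFICATION:
        state.notices.append(content)
    return state                       # unknown frame types are ignored


# Simulated frames, standing in for what a websocket client would receive.
frames = [
    '{"type": "notification", "content": "Thinking..."}',
    '{"type": "chat_token", "content": "Hel"}',
    '{"type": "chat_token", "content": "lo"}',
    '{"type": "chat", "content": "Hello"}',
]
state = ChatState()
for raw in frames:
    handle_frame(raw, state)
print(state.replies)  # ['Hello']
```

Keeping the frame handling separate from the transport means the same function can be driven by any websocket client, or by recorded frames in a test.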

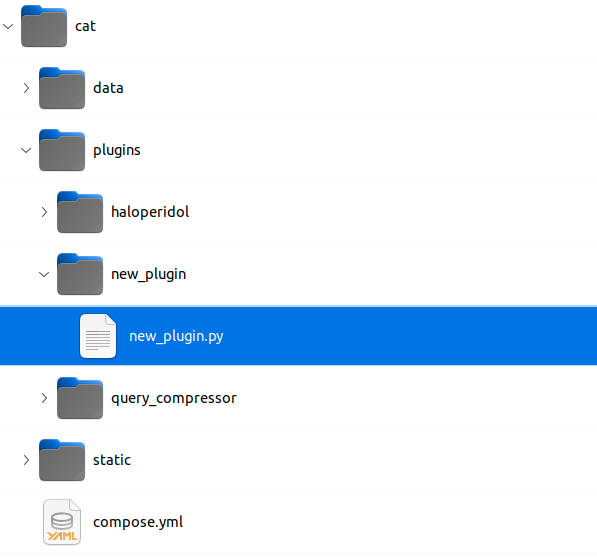

A plugin is just a folder

Focus on your agent, which is already online before you even start coding. Forget the OOP hell you probably experienced with other frameworks.

To create a plugin:

- Create a folder in `cat/plugins`

- Create a Python file in the folder

- Add hooks, tools and forms

- Debug in the admin chat with live reload
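The first two steps above can also be scripted. The following is a minimal sketch, not an official tool: the helper name `scaffold_plugin`, the plugin name `marvin_socks`, and the choice to name the file after the folder are all hypothetical. It writes into a temporary directory here; in practice you would point it at the `plugins` folder mounted into the container.

```python
import tempfile
from pathlib import Path

# Starter file content: a single hook that overrides the prompt prefix.
HOOK_SOURCE = '''from cat.mad_hatter.decorators import hook


@hook
def agent_prompt_prefix(prefix, cat):
    return "You are Marvin the socks seller. You reply with exactly one rhyme."
'''


def scaffold_plugin(plugins_dir: Path, name: str) -> Path:
    """Create <plugins_dir>/<name>/<name>.py with a starter hook."""
    plugin_dir = plugins_dir / name
    plugin_dir.mkdir(parents=True, exist_ok=True)
    plugin_file = plugin_dir / f"{name}.py"
    if not plugin_file.exists():  # never overwrite existing work
        plugin_file.write_text(HOOK_SOURCE, encoding="utf-8")
    return plugin_file


# Demo in a throwaway directory so nothing is left behind.
with tempfile.TemporaryDirectory() as tmp:
    created = scaffold_plugin(Path(tmp) / "plugins", "marvin_socks")
    created_name = created.name
    created_source = created.read_text(encoding="utf-8")

print(created_name)  # marvin_socks.py
```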

```python
from cat.mad_hatter.decorators import hook

@hook
def agent_prompt_prefix(prefix, cat):
    prefix = """You are Marvin the socks seller.
You reply with exactly one rhyme.
"""
    return prefix
```

Hooks

Customize your agent with event callbacks. Things you can do:

- Change the system prompt

- Inspect/edit inbound and outbound messages

- Fully customize the agent

- Create pipelines for memory and uploads
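To illustrate editing outbound messages, here is a minimal sketch of a second hook. It assumes a hook named `before_cat_sends_message` that receives the outgoing message and that the reply text is available under the `"content"` key; both can differ between Cat versions, so treat them as assumptions. The `SIGNATURE` constant is hypothetical. The fallback decorator only exists so the function can be tried outside a Cat installation.

```python
try:
    from cat.mad_hatter.decorators import hook
except ImportError:
    # Outside a Cat installation: no-op decorator, so the function below
    # can still be imported and called directly.
    def hook(func):
        return func

SIGNATURE = "\n\n(Marvin, socks seller)"  # hypothetical sign-off


@hook
def before_cat_sends_message(message, cat):
    """Append a signature to the outgoing reply (assumed "content" key)."""
    message["content"] = message["content"] + SIGNATURE
    return message
```

Called directly with a plain dict such as `{"content": "Hi"}`, the function returns the same dict with the signature appended.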

Tools

With tools, you can enable LLM function calling:

- Control home automation (domotics)

- Call a REST API

- Query a DB

- Integrate with a symbolic reasoner
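As one way to picture the "Query a DB" item, the sketch below wraps a SQLite lookup in a tool. The table layout, the helper names `open_catalog` and `lookup_price`, and the in-memory database are all hypothetical stand-ins for a real data source. The fallback decorator is only there so the function can run outside a Cat installation.

```python
import sqlite3
from contextlib import closing

try:
    from cat.mad_hatter.decorators import tool
except ImportError:
    # Outside a Cat installation: no-op decorator for local experiments.
    def tool(func):
        return func


def open_catalog() -> sqlite3.Connection:
    """Build a throwaway in-memory catalog (stand-in for a real database)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE socks (color TEXT PRIMARY KEY, price INTEGER)")
    conn.executemany(
        "INSERT INTO socks VALUES (?, ?)",
        [("black", 5), ("white", 10), ("pink", 50)],
    )
    conn.commit()
    return conn


def lookup_price(conn: sqlite3.Connection, color: str):
    """Return the price for a color, or None if it is not in the catalog."""
    row = conn.execute(
        "SELECT price FROM socks WHERE color = ?",  # parameterized query
        (color.strip().lower(),),
    ).fetchone()
    return None if row is None else row[0]


@tool
def socks_price_from_db(color, cat):
    """Get socks price from the catalog database. Input is the sock color."""
    # A fresh connection per call avoids sharing one across threads.
    with closing(open_catalog()) as conn:
        price = lookup_price(conn, color)
    if price is None:
        return f"No {color} socks in the catalog."
    return f"{price} bucks, meeeow!"
```

The parameterized query matters here because the tool input comes from the LLM and should never be interpolated into SQL directly.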

```python
from cat.mad_hatter.decorators import tool

@tool
def socks_prices(color, cat):
    """Get socks price. Input is the sock color."""
    prices = {
        "black": 5,
        "white": 10,
        "pink": 50,
    }
    price = prices.get(color, 0)
    return f"{price} bucks, meeeow!"
```

```python
from pydantic import BaseModel
from cat.experimental.form import form, CatForm

class PizzaOrder(BaseModel):
    pizza_type: str
    phone: int

@form
class PizzaForm(CatForm):
    description = "Pizza Order"
    model_class = PizzaOrder
    start_examples = ["I want pizza"]
    stop_examples = ["not hungry anymore"]
    ask_confirm = True

    def submit(self, form_data):
        # do the actual order here!
        return {
            "output": "Pizza order on its way"
        }
```

Forms

Handle goal-oriented, multi-turn conversations:

- Gather complex information on autopilot

- Based on Pydantic models (types and custom validation)

- Ask for a final confirmation

- Custom submit callback
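The "custom validation" point can be sketched on the model alone, without the form machinery. This example assumes Pydantic v2 (it uses `field_validator`); the `MENU` set and the `try_order` helper are hypothetical and only serve the illustration.

```python
from pydantic import BaseModel, ValidationError, field_validator

MENU = {"margherita", "marinara", "diavola"}  # hypothetical menu


class PizzaOrder(BaseModel):
    pizza_type: str
    phone: int

    @field_validator("pizza_type")
    @classmethod
    def pizza_must_be_on_menu(cls, value: str) -> str:
        """Normalize the pizza name and reject anything off the menu."""
        normalized = value.strip().lower()
        if normalized not in MENU:
            raise ValueError(f"{value!r} is not on the menu")
        return normalized


def try_order(pizza_type, phone):
    """Return (order, None) on success or (None, error messages) on failure."""
    try:
        return PizzaOrder(pizza_type=pizza_type, phone=phone), None
    except ValidationError as err:
        return None, [e["msg"] for e in err.errors()]
```

A model like this could then be assigned to `model_class` in a form, so that invalid values are rejected while the information is being gathered.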

Latest from Wonderland

- I tried fine-tuning Llama 3.1 8b and then hooked it up to the Cheshire Cat

  Premise: As an IT technician with some experience in software development and data management, I’ve only recently ventured into the world of artificial intelligence, particularly in the fine-tuning of language models. This article was born from my hands-on exploration of the Llama 3.1 8B fine-tuning process, with the aim of creating a custom LLM tailored…

- Using multiple Cat instances with the same Ollama instance

  If you’ve landed on this guide, you’re probably wondering: how can I use the same Ollama instance to connect it to multiple Cat instances? Using a single Ollama instance allows you to save both memory and money, avoiding the need for powerful hardware to run multiple instances, each with a separate LLM. In this guide,…

- A Python-based Cheshire Cat CLI

  What is the Cheshire Cat CLI? The Cheshire Cat CLI (cat_chat.py) is a command line interface designed to allow real-time interaction with the Cheshire Cat AI using Python. This simple tool establishes a WebSocket connection and allows the user to send messages and receive answers from an LLM. It was designed to emphasize the use…

Join us at the next Meow Event!

Our growing and active community hosts many furrmidable events on the Discord server.

Don’t miss the chance to take part in the Cheshire Cat community, and save the dates! Meeoow!